Natural Language Acquisition in Recurrent Neural Architectures

Stefan Heinrich

Thesis: PhD thesis, Department of Informatics, Universität Hamburg, Hamburg, DE, Jun 2016

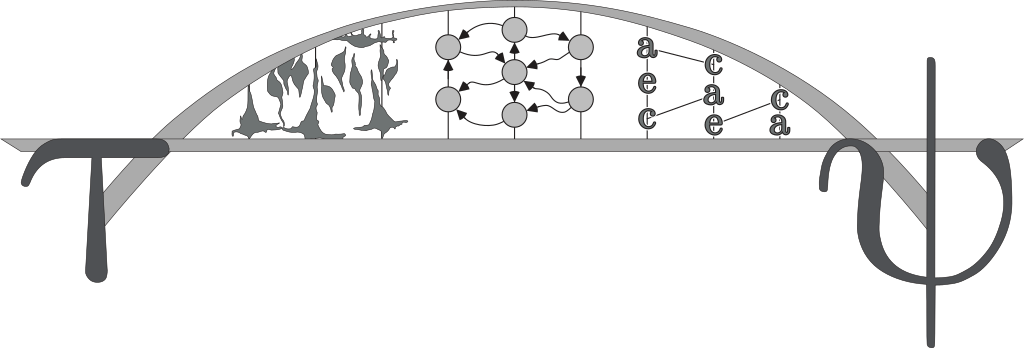

Abstract: The human brain is one of the most complex dynamic systems, and it enables us to communicate (and externalise) information through natural language. Our languages go far beyond single sounds for expressing intentions; in fact, human children already join discourse by the age of three. It is remarkable that in these first years they show a tremendous capability for acquiring language competence from interaction with caregivers and their environment. However, our understanding of the behavioural and mechanistic characteristics of natural language acquisition is, likewise, still in its infancy. We have a good understanding of some principles underlying natural languages and language processing, some insight into where activity occurs in the brain, and some knowledge about the socio-cultural conditions framing acquisition. Nevertheless, we have not yet discovered how the mechanisms in the brain allow us to acquire and process language. The goal of this thesis is to bridge the gap between the insights from linguistics, neuroscience, and behavioural psychology, and to contribute an understanding of the characteristics of a brain-inspired neural architecture that favour language acquisition. Accordingly, the thesis provides tools to employ and improve the developmental robotics approach with respect to speech processing and object recognition, as well as concepts and refinements in cognitive modelling regarding gradient descent learning and the hierarchical abstraction of context in plausible recurrent architectures. On this basis, the thesis demonstrates two consecutive models for language acquisition from the natural interaction of a humanoid robot with its environment. The first model is able to process speech production over time, embodied in visual perception.
This architecture consists of a continuous time recurrent neural network (CTRNN) in which parts of the network have different leakage characteristics and thus operate on multiple timescales (called a multiple timescale recurrent neural network, MTRNN), together with associative layers that integrate embodied perception into continuous phonetic utterances. The most important properties of this model are compositionality in language acquisition, generalisation in production, and reasonable robustness. The second model is capable of learning language production grounded in both temporally dynamic somatosensation and temporally dynamic vision. This model comprises an MTRNN for every modality and associates the higher-level nodes of all modalities into cell assemblies. Thus, this model features hierarchical concept abstraction in sensation as well as concept decomposition in production, multi-modal integration, and self-organisation of latent representations. The main contributions to knowledge from the development and study of these models are as follows: a) general mechanisms for abstracting and self-organising structures from sensory and motor modalities foster the emergence of language acquisition; b) timescales in the brain's language processing are necessary and sufficient for compositionality; and c) shared multi-modal representations are able to integrate novel experience and modulate novel production. The studies in this thesis can inform future studies in neuroscience on multi-modal integration and development, and in interactive robotics on hierarchical abstraction in information processing and language understanding.
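The idea of units with "different leakage characteristics" operating on multiple timescales can be illustrated with the leaky-integrator update commonly used for CTRNNs and MTRNNs, u_i(t) = (1 - 1/τ_i) u_i(t-1) + (1/τ_i)(Σ_j w_ij y_j(t-1) + x_i(t)), with y = tanh(u). The following is a minimal sketch under that assumption, not the thesis' implementation: the exact equations, activation function, layer structure (e.g. separate input-output, fast-context, and slow-context layers), and τ values are not given here, and the helper names `mtrnn_step` and `make_mtrnn` as well as the defaults `tau_fast=2.0` and `tau_slow=50.0` are hypothetical.

```python
import math
import random


def mtrnn_step(u, weights, taus, inputs):
    """Advance a leaky-integrator recurrent network by one time step.

    u       -- internal states, one per unit
    weights -- weights[i][j] is the connection from unit j to unit i
    taus    -- time constant per unit; a larger tau means slower dynamics
    inputs  -- external input per unit for this step
    Returns the new internal states and their tanh activations.
    """
    y = [math.tanh(v) for v in u]  # activations from the previous step
    new_u = []
    for i, tau in enumerate(taus):
        net = sum(w * yj for w, yj in zip(weights[i], y)) + inputs[i]
        # Leaky integration: keep (1 - 1/tau) of the old state, add 1/tau of the net input.
        new_u.append((1.0 - 1.0 / tau) * u[i] + net / tau)
    return new_u, [math.tanh(v) for v in new_u]


def make_mtrnn(n_fast, n_slow, tau_fast=2.0, tau_slow=50.0, seed=0):
    """Build a hypothetical fully connected network: fast units first, then slow units."""
    rng = random.Random(seed)
    n = n_fast + n_slow
    taus = [tau_fast] * n_fast + [tau_slow] * n_slow
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n)] for _ in range(n)]
    return weights, taus


if __name__ == "__main__":
    # Zero weights and zero input isolate the leak: each unit only decays from its start value.
    n_fast, n_slow = 3, 3
    _, taus = make_mtrnn(n_fast, n_slow)
    zero_w = [[0.0] * len(taus) for _ in taus]
    u = [1.0] * len(taus)
    for _ in range(10):
        u, _ = mtrnn_step(u, zero_w, taus, [0.0] * len(taus))
    print("fast units:", [round(v, 4) for v in u[:n_fast]])
    print("slow units:", [round(v, 4) for v in u[n_fast:]])
```

After ten steps the fast units (τ = 2) have almost completely forgotten their initial state, while the slow units (τ = 50) still retain most of it. This separation of timescales is what allows slow units to carry longer-range context while fast units track short, rapidly changing patterns.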